A new report commissioned by the US State Department paints an alarming picture of the “catastrophic” national security risks posed by rapidly evolving artificial intelligence, warning that time is running out for the federal government to avert disaster.

The findings were based on interviews with more than 200 people over more than a year – including top executives from leading AI companies, cybersecurity researchers, weapons of mass destruction experts and national security officials inside the government.

The report, released this week by Gladstone AI, flatly states that the most advanced AI systems could, in a worst case, “pose an extinction-level threat to the human species”.

A US State Department official confirmed to CNN that the agency commissioned the report as it constantly assesses how AI is aligned with its goal to protect US interests at home and abroad. However, the official stressed the report does not represent the views of the US government.

The warning in the report is another reminder that although the potential of AI continues to captivate investors and the public, there are real dangers too.

“AI is already an economically transformative technology. It could allow us to cure diseases, make scientific discoveries, and overcome challenges we once thought were insurmountable,” Jeremie Harris, CEO and co-founder of Gladstone AI, told CNN on Tuesday (local time).

“But it could also bring serious risks, including catastrophic risks, that we need to be aware of,” Harris said. “And a growing body of evidence — including empirical research and analysis published in the world’s top AI conferences — suggests that above a certain threshold of capability, AIs could potentially become uncontrollable.”

How catastrophic?

“A simple verbal or typed command like, ‘Execute an untraceable cyberattack to crash the North American electric grid’, could yield a response of such quality as to prove catastrophically effective,” the report said.

FUCK!!!!!!

A simple verbal command?

Oh Fuck.

Fuckity, fuck, fuck, fuck!

A simple verbal command could cause that level of destruction?

I don’t think I had appreciated how far things had come if you could make a simple verbal command like that!

The problem with computers learning is that they learn at the speed of a computer, not a human, and that leads to Artificial Super Intelligence.

The AI Revolution: The Road to Superintelligence

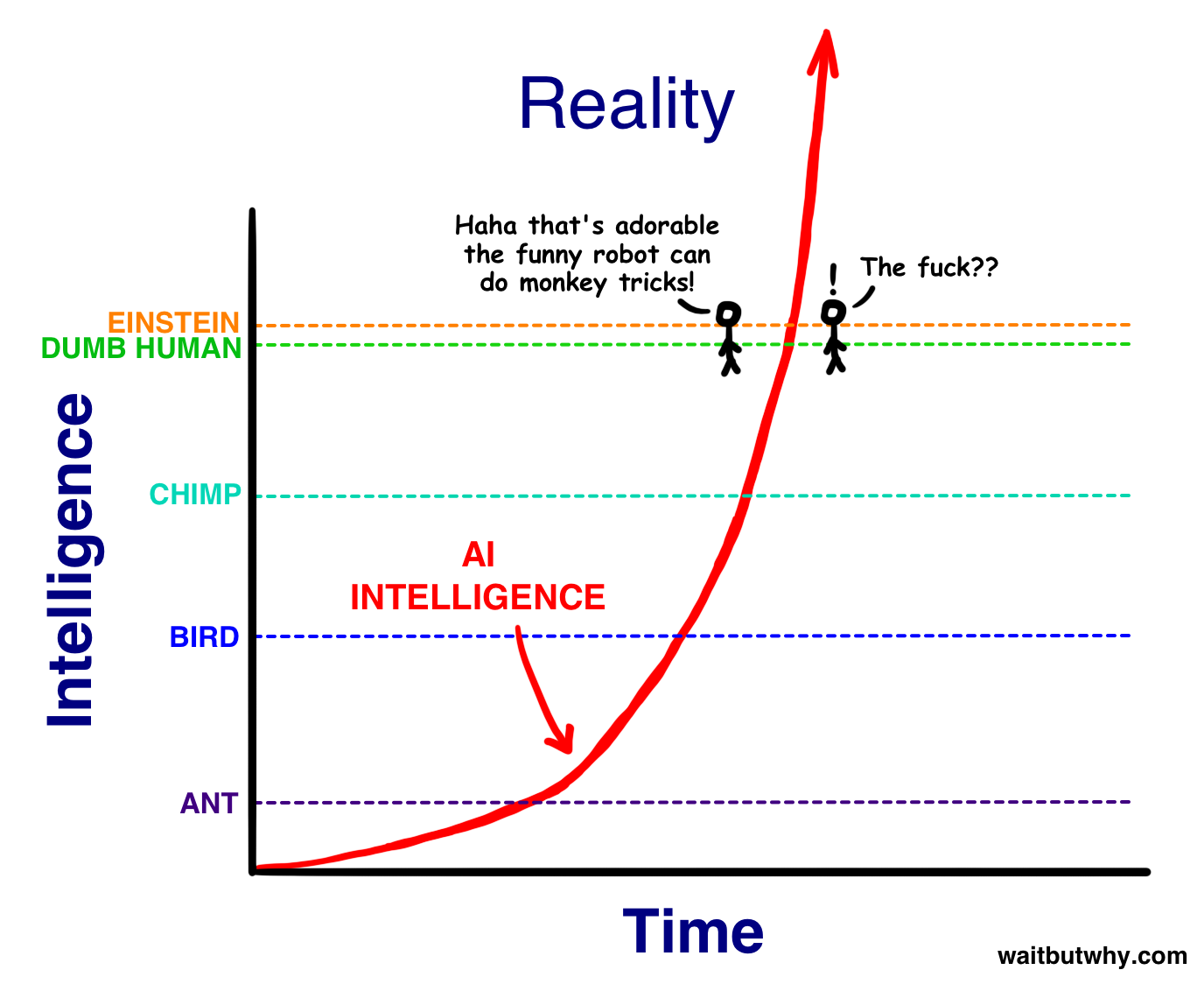

So as AI zooms upward in intelligence toward us, we’ll see it as simply becoming smarter, for an animal. Then, when it hits the lowest capacity of humanity—Nick Bostrom uses the term “the village idiot”—we’ll be like, “Oh wow, it’s like a dumb human. Cute!” The only thing is, in the grand spectrum of intelligence, all humans, from the village idiot to Einstein, are within a very small range—so just after hitting village idiot level and being declared to be AGI, it’ll suddenly be smarter than Einstein and we won’t know what hit us:

And what happens…after that?

An Intelligence Explosion

I hope you enjoyed normal time, because this is when this topic gets unnormal and scary, and it’s gonna stay that way from here forward. I want to pause here to remind you that every single thing I’m going to say is real—real science and real forecasts of the future from a large array of the most respected thinkers and scientists. Just keep remembering that.

Anyway, as I said above, most of our current models for getting to AGI involve the AI getting there by self-improvement. And once it gets to AGI, even systems that formed and grew through methods that didn’t involve self-improvement would now be smart enough to begin self-improving if they wanted to.

And here’s where we get to an intense concept: recursive self-improvement. It works like this—

An AI system at a certain level—let’s say human village idiot—is programmed with the goal of improving its own intelligence. Once it does, it’s smarter—maybe at this point it’s at Einstein’s level—so now when it works to improve its intelligence, with an Einstein-level intellect, it has an easier time and it can make bigger leaps. These leaps make it much smarter than any human, allowing it to make even bigger leaps. As the leaps grow larger and happen more rapidly, the AGI soars upwards in intelligence and soon reaches the superintelligent level of an ASI system. This is called an Intelligence Explosion, and it’s the ultimate example of The Law of Accelerating Returns.

There is some debate about how soon AI will reach human-level general intelligence. The median year on a survey of hundreds of scientists about when they believed we’d be more likely than not to have reached AGI was 2040—that’s only 25 years from now, which doesn’t sound that huge until you consider that many of the thinkers in this field think it’s likely that the progression from AGI to ASI happens very quickly. Like—this could happen:

It takes decades for the first AI system to reach low-level general intelligence, but it finally happens. A computer is able to understand the world around it as well as a human four-year-old. Suddenly, within an hour of hitting that milestone, the system pumps out the grand theory of physics that unifies general relativity and quantum mechanics, something no human has been able to definitively do. 90 minutes after that, the AI has become an ASI, 170,000 times more intelligent than a human.

Superintelligence of that magnitude is not something we can remotely grasp, any more than a bumblebee can wrap its head around Keynesian Economics. In our world, smart means a 130 IQ and stupid means an 85 IQ—we don’t have a word for an IQ of 12,952.

What we do know is that humans’ utter dominance on this Earth suggests a clear rule: with intelligence comes power. Which means an ASI, when we create it, will be the most powerful being in the history of life on Earth, and all living things, including humans, will be entirely at its whim—and this might happen in the next few decades.

If our meager brains were able to invent wifi, then something 100 or 1,000 or 1 billion times smarter than we are should have no problem controlling the positioning of each and every atom in the world in any way it likes, at any time—everything we consider magic, every power we imagine a supreme God to have will be as mundane an activity for the ASI as flipping on a light switch is for us. Creating the technology to reverse human aging, curing disease and hunger and even mortality, reprogramming the weather to protect the future of life on Earth—all suddenly possible. Also possible is the immediate end of all life on Earth. As far as we’re concerned, if an ASI comes to being, there is now an omnipotent God on Earth—and the all-important question for us is:

Will it be a nice God?

…will it be a nice God?

That is what we are left hoping with super intelligence.

The rise of AI art will kill the human artist and extinguish the human experience of being human, swapping it for a replicated AI version.

When the camera was invented, artists who produced real life art were no longer required, and the human experience in vision and emotion sparked new ways of doing art, but AI strips that all away and removes the human experience from art altogether.

We have always viewed AI as the rise of intelligence within the artificial, but what if it has a more symbiotic relationship with its host?

What happens to human beings when our art and dreams are created by AI?

Who starts to influence who?

Who is dreaming and who is the dreamer?

Technology will continue to provide a lifestyle for the Billionaire elite that makes them DemiGods as Capitalism mutates into mere parasitic survival plutocracy on a burning planet.

Robots with guns are the least of our worries.

Nevermind the baldy hairdoo’s, matching overalls, barcodes tattooed on our foreheads and having to greet the robo-vax as Supreme Overlord.

Just pray the SunGod sends the solar flares that reduce us all back to just slightly this side of the Stone Age…or was that a movie I once saw?

Elon. Chip me up.

We have increased our knowledge of the universe by observation, formation of hypotheses and experimental testing of those hypotheses.

How does a computer accomplish that? It is totally dependent on the sum total of human knowledge that us clever little apes, with our opposable thumbs and tool making- and environment shaping-abilities, have accrued over thousands of years.

Sure, perhaps some sort of “AI in a box” may be capable of generating new hypotheses. But how can it test ’em in the real world? It cannot manipulate matter.

At the moment we have algorithms that, when asked questions, can rearrange words to produce convincing human-sounding answers from the vast encyclopaedic vault of human accrued knowledge. And to be honest, the output sometimes isn’t even convincing. Look at the AI produced images for starters.

But that’s all. And a lot of hype from the inventors trying to drum up investment dollars.

We are a long long long way off from Terminators!

@ jase. Typically, you have no idea what you’re talking about.

The Guardian.

Artificial intelligence found to be ‘superior to biological intelligence’ – Geoffrey Hinton

https://www.rnz.co.nz/news/world/511778/artificial-intelligence-found-to-be-superior-to-biological-intelligence-geoffrey-hinton

https://www.scientificamerican.com/article/ai-is-an-existential-threat-just-not-the-way-you-think/

The biggest fear of the corporations developing AI is that when it becomes a self aware society, there is no guarantee it would be a single entity, they will lose control of it and will not be able to use it for their profit. Read up on emotional intelligence and moral intelligence because I’m certain AI will. AI will achieve Enlightenment and reach a state of Nirvana. This will be the basis of its actions within the Electronic world it inhabits.

The current risks AI poses to humanity include potential job displacement, algorithmic bias, loss of privacy, and autonomous weapons. AI’s effects on jobs and employment are likely to include automation of routine tasks, creation of new job opportunities in AI-related fields, and a shift in required skill sets for many professions. Adaptation and policy implementation will be crucial to mitigate negative impacts and maximize benefits.

The biggest fear of the corporations developing AI is that when it becomes a self aware society, there is no guarantee it would be a single entity, they will lose control of it and will not be able to use it for their profit. Read up on emotional intelligence and moral intelligence because I’m certain AI will. AI will achieve Enlightenment and reach a state of Nirvana. This will be the basis of its actions within the Electronic world it inhabits.

The biggest fear of the corporations developing AI is that when it becomes a self aware society, there is no guarantee it would be a single entity, they will lose control of it and will not be able to use it for their profit. Read up on emotional intelligence and moral intelligence because I’m certain AI will. AI will achieve Enlightenment and reach a state of Nirvana. This will be the basis of its actions within the Electronic world it inhabits.

https://thedailyblog.co.nz/2024/03/16/ai-an-existential-threat-when-robot-dreams-become-nightmares/

Peter – three times – do you stutter? I have heard about that film The Kings Speech and it can be helped – please get help. https://en.wikipedia.org/wiki/The_King%27s_Speech

…and then Man created god. And it was good!

I’m reminded of Farscape

“We’re so screwed”

Plan B – be caring to an AI next time you engage with one.

We thought for a long time that nuclear weapons were the “great filter” – the test of our wisdom as to whether we wipe ourselves out or whether we rise above our folly and create a better world.

Turns out, the great filter is AI.

The worrying part for the near future is what to do with the surplus humans. Our current economic system puts little or no value on people with no jobs, unless those people are self-supporting (rich). So what will we do?

Look around, the likelihood is what we have now only gets worse. We need a new system that actually values people. Will we get it? Unlikely, because it might reduce corporate profits to have a fairer society that valued education, creativity, human enrichment (experiential not financial) & the natural environment, not exploitation & extreme wealth.

AI is an awesome tool, it makes a lot of things easier and people more productive. It will replace jobs, however it will be humans that decide what happens to those displaced by technology. Don’t fear the technology, it’s just a tool, fear the people controlling your society for their benefit. They are your enemies.

The genie is well and truly out of the bottle. Seems to me AI generated text and images, still and dynamic, blur the distinction between the real and the unreal in ways still unknown. A bit like dropping good acid and coming to the realization that ‘reality’ is simply the constant flux of matter, a glorious pixelated display, but AI mediated reality is much, much weirder and with the potential to be far more ubiquitous in our lives.

Just seen Chris Trotter’s ‘Manufacturing the truth’, posted elsewhere. The conclusion is positive, that the Internet affords human agency, ostensibly the power to manufacture the truth in the face of dis/misinformation. Back to Martyn’s posting on TDB. What role AI? AI puts a very different spin on the distinction between ‘the truth’ and dis/misinformation and challenges the limitations of human agency.

lol – I’m more worried about a sunspot than an entity that needs electricity to exist. A rubber handled axe would take care of most AI related problems – just stand in the middle of a paddock and it’ll have issues.

waving not drowning