Truth-Based Legal Revolution

“A nation that gives up control of its own credit gives up control of its own destiny.”

— John A. Lee

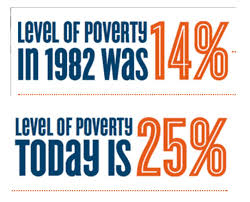

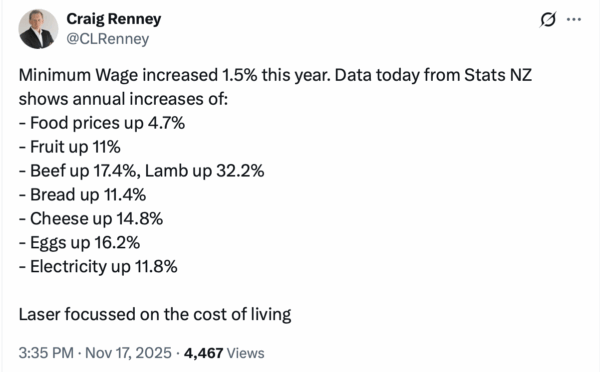

Treasury says NZ is financially unsustainable.

But New Zealand is not broke.

New Zealand is betrayed.

And nothing exposes that betrayal more clearly than the fact that we, a sovereign nation with our own central bank, are now forced to borrow our own currency from private banks – at interest – while being told by our politicians that “we can’t afford” homes, hospitals, rail, energy, or food security.

This is the quiet treason of our age. Colin James called it a “Quiet Revolution”, but I think it was really a theft.

It didn’t happen with tanks or coups.

It happened with three Acts of Parliament driven by a treacherous Treasury, and a bipartisan political class too timid, too stupid, or too treacherous to challenge the lies that stole our sovereignty. It’s never too late to say sorry. But the trend is clear.

John A. Lee would have recognised immediately where New Zealand is going. Fascism is capitalism when times get tough.

He fought these forces, and lost an arm doing it.

Today, they’ve simply changed costume and language. “Freedom and democracy” means corporate freedom and corporate control of our nation’s resources and finances.

New Zealand’s Tragedy: A People Betrayed by Their Own Representatives

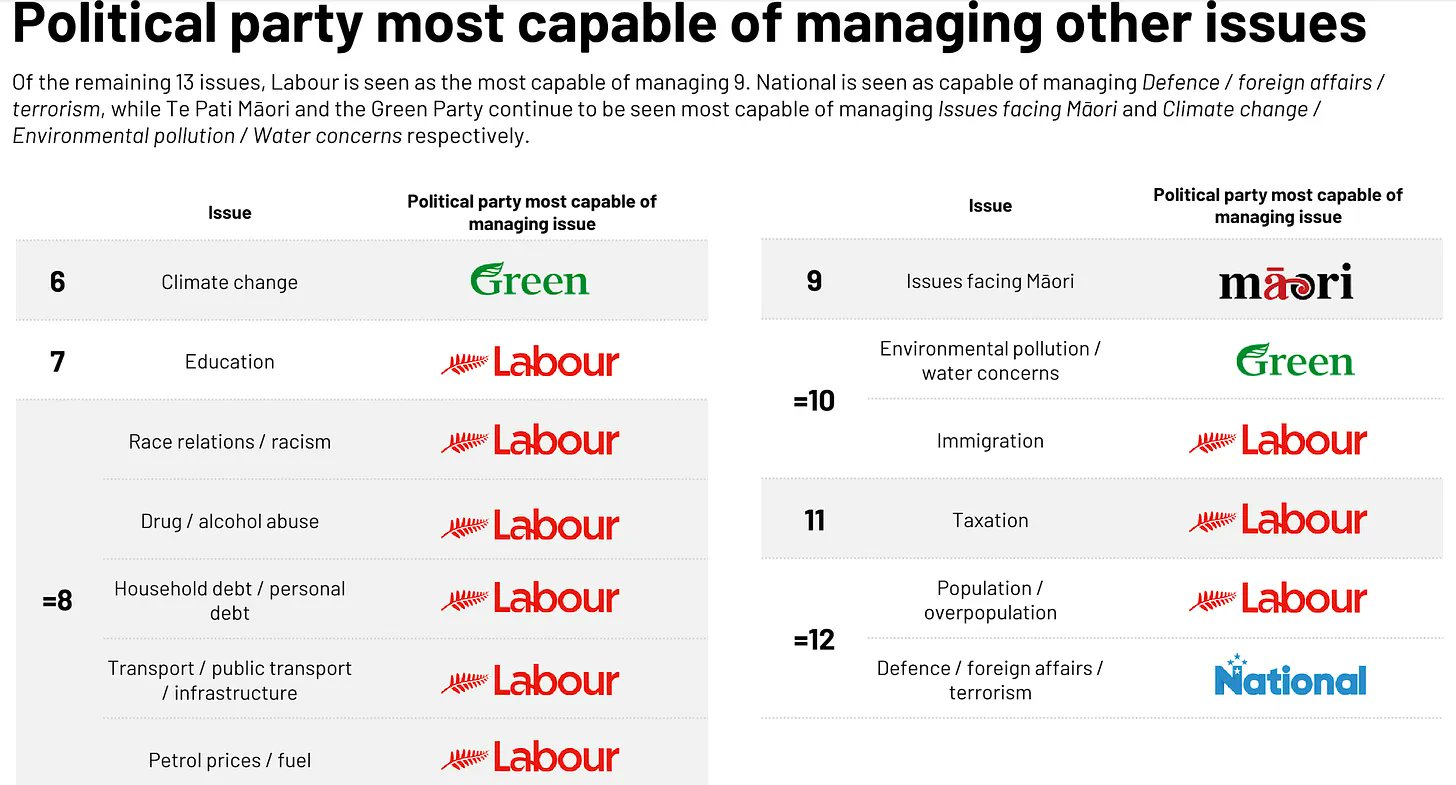

Modern New Zealand politics is not divided by Left and Right.

It is divided into:

- those of us who believe a nation should govern itself,

- and our politicians, who act as local managers for foreign capital and private banks.

Sadly, the second group includes:

- David Seymour

- Christopher Luxon

- Chris Hipkins

- And every finance minister since 1984 who swallowed Treasury doctrine whole.

They quarrel on TV, but they serve the same master:

a financial system that extracts from New Zealand rather than builds it.

They differ culturally, but economically?

They are obedient servants of the same orthodoxy:

the belief that private banks, foreign creditors, and bond markets should determine how New Zealand invests in its future.

And in that obedience, they have quietly committed national treason – not by law, but by consequence. We have the American disease, and we are eating our seed.

The One Thing They Never Mention: Public Credit

John A. Lee understood something our modern leaders pretend not to know:

A sovereign nation does not borrow its own money.

A sovereign nation issues it.

Lee and Savage built thousands of state houses using the country’s own credit.

Muldoon used directed credit to build energy capacity.

The wartime government used sovereign credit to finance over 50% of national income during 1942–44 without foreign debt.

The historical record is clear:

Every period of sovereign credit built assets.

Every period of private or foreign credit built liabilities.

But between 1989 and 1994, New Zealand committed economic suicide:

- The Public Finance Act forbade public credit.

- The Fiscal Responsibility Act banished public investment.

- The RBNZ Act removed national development from monetary policy.

We went from being the builders of our own nation

to being tenants of private banks.

That is the structural betrayal at the heart of modern politics. We no longer build our wealth.

An active state enables an ecosystem of entrepreneurship in pursuit of strategic goals. Our state has instead largely let the market decide…

The Dismal Treason of Labour, National, and ACT

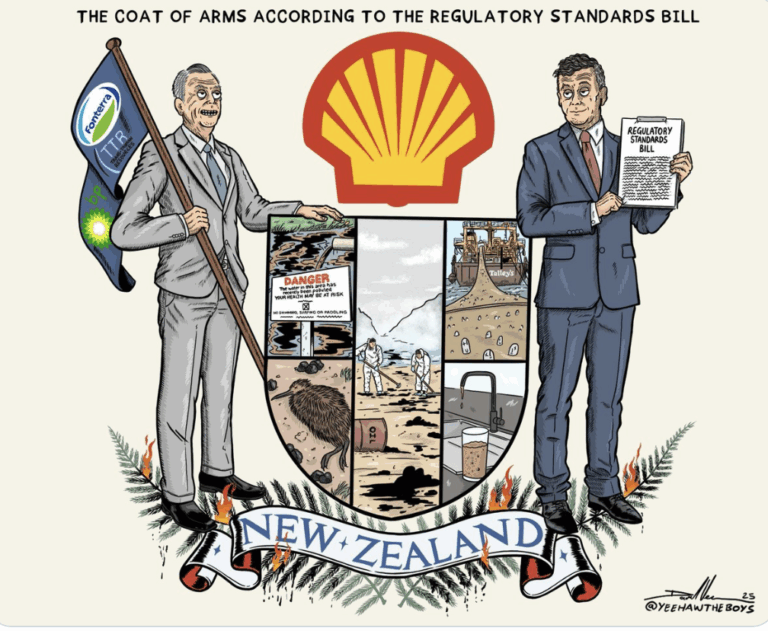

ACT – The Ideological Berserkers and Spear Tip of the Corporate Class

ACT was founded by Roger Douglas, of ‘Rogernomics’, who has since renounced it as ‘a party for the wealthy’. Which it is. ‘A party for the wealth-addicted’ might sum it up best.

David Seymour’s economics make no sense. Classical economists like Adam Smith would damn his policies as lacking in virtue and prudence; as pure cartel.

In Lee’s language,

“Seymour mistakes bondage for freedom. He would sell the hospital to pay for the ambulance, or let a company own our water and air.” Or, to use a specific example, he would destroy a vibrant, healthy local school lunch economy to pay a dodgy international corporate for inferior slop from overseas instead.

National – The Chumocracy

Christopher Luxon speaks of fiscal responsibility but obeys the 1989 rulebook written by the very interests Lee fought. He’s Chris, from sales, and the public don’t like him or his coalition’s crony capitalist policies.

He governs from inside a shrinking cage built by Treasury, IMF doctrine, and private banking privilege – and lies to our faces frequently, it feels to me.

Lee’s verdict:

“Luxon confuses obedience with responsibility, and selfishness with public service.”

Labour – Apostates of Their Own Legacy. Our Economic Traitors.

The moral tragedy is Labour.

The party founded on the promise of public credit became the party that outlawed it.

Douglas, Prebble, Lange, and their heirs turned a workers’ movement into a managerial class for private finance. It’s repugnant.

Hipkins – a one-time employee of NZ’s richest family – is the latest to inherit the machine, and he refuses to return it to purpose. We are assured that he is a ‘democratic socialist’. But what does this matter if democratic socialism is, at the end of the day, just putting a face on our continued debt bondage and decline into a class society of increased racial division?

Labour kept the colour but abandoned the purpose.

Lee would be merciless:

“Labour forgot the poor and married the bankers. It kept the hymnbook, but sold the church.”

It’s hard to trust your high priests, but by their actions we must judge them.

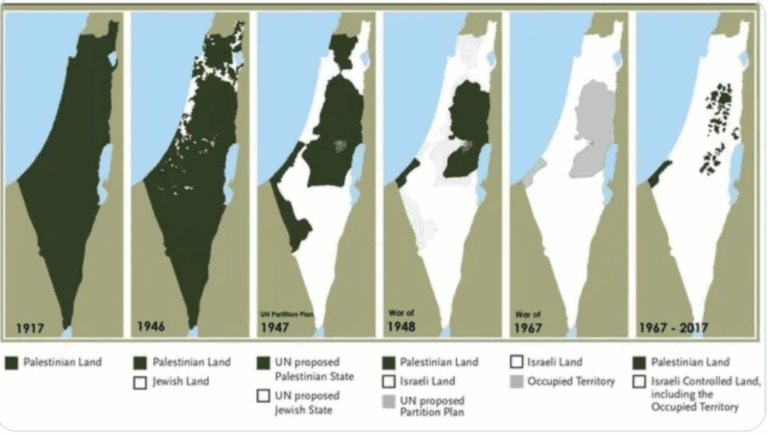

The Extraction Economy: A Quiet Colonisation

Over the past forty years, New Zealand’s wealth has been siphoned away through four great drains:

1. Private bank profit extraction

(>$10 billion per year, large portions repatriated offshore)

2. Foreign debt servicing

(~200% of GDP external leverage)

3. Low resource royalties

(2% on gold, or 10% of net profits, which is likely subject to gaming through transfer pricing; near-zero on water; low returns on minerals)

4. Transfer pricing by multinationals

(shifting profits offshore tax-free)

This is not a market economy.

This is a colonial economy with better branding.

We are not independent.

We have been recolonised by balance sheets.

As John A. Lee would put it:

“Foreign creditors get the cream,

the banks get the skim,

and the New Zealander doesn’t own the cow anymore.”

What LSAP Proved: The Money Was Always There

When COVID hit, the Reserve Bank created $60 billion out of thin air.

Not for homes.

Not for hospitals.

Not for infrastructure.

Not for clean energy.

No – for private bank balance sheets, and at a compounding interest of three billion dollars a year. Because we didn’t even create it for ourselves; we created it to pay for borrowing more from banks. At interest.

It’s mad. It’s irresponsible.

It’s economic treason.

Overnight, the ATM opened for banks.

But when citizens need public investment, the government says:

“Sorry, there’s no money.”

John A. Lee would have roared:

“You can conjure billions for bankers and helicopters in a heartbeat,

but not a roof for a family in need? Nor credit for a farmer to develop our economy? Nor our industry?

Who do you govern for?”

The Moral Line: Sovereignty is a Balance Sheet

This is not ideological.

This is not left or right.

This is moral.

A sovereign people should never be forced to rent their own future.

And no democratic government should be forbidden from investing in its own country.

The 1989–94 Acts created an undemocratic constitution where private banks rule the nation’s investment agenda.

That is the quiet and deadly treason of our lifetimes. Wrought by a treacherous Treasury, an incompetent or perfidious Labour, and a rabid right wing.

The Golden Kiwi Path: Reclaim What Is Ours

Here is the way forward:

1. Rewrite the Public Finance Act (1989)

→ Restore public credit creation

2. Amend the RBNZ Act (2021)

→ Add national development to its mandate

3. Replace the Fiscal Responsibility Act (1994)

→ With an Intergenerational Balance Sheet Act

4. Create a Resource Sovereignty Act

→ Make our minerals and water work for us, not foreign shareholders

5. Establish a National Development Ledger

→ Show every Kiwi exactly how public credit builds real wealth

This isn’t radical.

It’s what we used to do.

It’s how we built the country the first time.

And as Lee would remind us:

“You built a nation once.

You can build it again.”

The Call to Courage

New Zealand doesn’t lack resources.

It lacks self-belief. In fact, we don’t even seem to know what’s going on.

We don’t lack money.

We lack a sovereign imagination. We’ve been chumped from citizens into consumers and customers.

We don’t lack the tools – we lack leaders willing to pick them up.

It is time to end the forty-year capture of our Treasury.

It is time to restore public credit.

It is time to build again.

As John A. Lee would thunder:

“New Zealanders are not poor.

They are simply being charged interest on their own birthright.

Take back your Treasury – and take back your future.”

Each one teach one, learn and share.

Tadhg Stopford is a historian and teacher.

Support change by purchasing your CBD hemp CBG at www.tigerdrops.co.nz