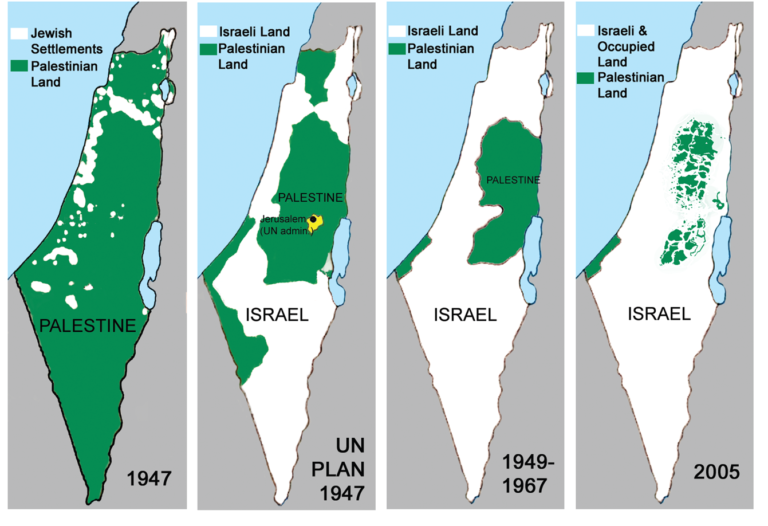

In Occupied Palestine

Zionism in practice

Israel’s Daily Toll on Palestinian Life, Limb, Liberty and Land

08:00, 14 April until 08:00, 15 April 2024

[Source of statistics: Palestinian Monitoring Group]

Gaza Strip

Air strikes: Heavy aerial bombardment on buildings, homes and many facilities.

Attacks: All over Gaza, there are air strikes, heavy gunfire, tank and artillery shelling, as well as missiles fired from Israeli forces and military occupation, especially in Khan Yunis. The Israeli Navy continues to fire missiles, targeting facilities and buildings along the shoreline of the whole of Gaza.

Victims: 68 people were killed in Gaza, bringing the total number of deaths since 7 October to at least 33,797. Another 94 were wounded, bringing the total number of wounded to 76,465.

Key Highlights

About 800,000 people may be forced to evacuate if the Israeli military launches a ground incursion into Rafah, warned Jamie McGoldrick at the end of his mission as Humanitarian Coordinator ad interim.

About 90 per cent of the approximately 4,000 buildings located along Gaza’s eastern border have been destroyed or damaged, according to UNOSAT.

WFP and UNOPS deliver fuel to enable the operation of a bakery in Gaza city for the first time in months.

Large groups of Israeli settlers attacked at least 17 Palestinian villages after a boy from an Israeli settlement in Ramallah governorate went missing and was later found dead. Two Palestinians were killed, at least 45 were injured, at least 20 households were displaced when their homes were burnt down, and dozens of homes, vehicles and agricultural structures sustained damage.

Gaza Strip Updates

Israeli bombardment from the air, land, and sea continues to be reported across much of the Gaza Strip, resulting in further civilian casualties, displacement, and destruction of houses and other civilian infrastructure. Since 10 April, airstrikes, shelling and heavy fighting have reportedly been particularly intense in and around An Nuseirat Refugee Camp in Deir al Balah, with reports of the Israeli military detonating buildings, particularly in the new camp of An Nuseirat and northern An Nuseirat. Armed confrontations between the Israeli military and Palestinian armed groups also continue to be reported, especially in Deir al Balah and eastern Rafah.

Between the afternoon of 12 April and 11:30 on 15 April, according to the Ministry of Health (MoH) in Gaza, 163 Palestinians were killed, and 251 Palestinians were injured, including 68 killed and 94 injured in the past 24 hours. Between 7 October 2023 and 11:30 on 15 April 2024, at least 33,797 Palestinians were killed in Gaza and 76,465 Palestinians were injured, according to MoH in Gaza.

The following are among the deadly incidents between 11 and 14 April:

On 11 April, in the afternoon, seven Palestinians were reportedly killed when a market in An Nuseirat Refugee Camp, in Deir al Balah, was hit.

On 12 April, at about 9:10, at least 25 Palestinians were reportedly killed when a residential building in Ad Daraj neighbourhood, in Gaza city, was hit.

On 12 April, in the morning, at least one internally displaced person (IDP) was killed, and nine others were injured, when the UNRWA Elementary Boys “B” and Girls “A” school in the new camp in An Nuseirat Refugee Camp, in Deir al Balah, was hit. According to the Palestinian Civil Defense (PCD), their teams were not able to go inside the school, which hosts a high number of IDPs, to carry out rescue operations and transfer the killed and injured people.

On 12 April, at about 17:15, five Palestinians were reportedly killed, and 30 injured, when a house in Az Zarqa area, in Gaza city, was hit.

On 13 April, in the afternoon, at least three Palestinians were reportedly killed when a house in An Nuseirat Refugee Camp was hit.

On 13 April, at about 14:15, three Palestinians were reportedly killed when a house in the new camp of An Nuseirat Refugee Camp was hit.

On 13 April, at about 21:30, seven Palestinians, including at least three women, were reportedly killed when a house in Al Mufti land, north of An Nuseirat Refugee Camp, was hit.

On 14 April, at least one Palestinian woman was reportedly killed, and 23 others injured, by gunfire when a group of Palestinians were hit on Al Rashid Road while attempting to return to northern Gaza. Up to five fatalities were subsequently reported as a result of this incident.

Between the afternoons of 12 and 15 April, no Israeli soldiers were reported killed in Gaza. As of 15 April, 259 soldiers have been killed and 1,571 soldiers have been injured in Gaza since the beginning of the ground operation, according to the Israeli military. In addition, over 1,200 Israelis and foreign nationals, including 33 children, have been killed in Israel, the vast majority on 7 October. As of 15 April, Israeli authorities estimate that 133 Israelis and foreign nationals remain captive in Gaza, including fatalities whose bodies are withheld.

On 11 April, intensive airstrikes, shelling and fighting in and around the northern area of An Nuseirat Refugee Camp reportedly prompted residents of the new camp in An Nuseirat and people who live near Wadi Gaza to evacuate to the central and southern areas of An Nuseirat. On 12 April, in the evening, residents of northern An Nuseirat reportedly received calls from the Israeli military to evacuate their homes. Earlier on the same day, at about 13:30, a group of journalists was reportedly struck during their media coverage in An Nuseirat, severely injuring one journalist, who later had his foot amputated. According to the Gaza Government Media Office, 140 Palestinian journalists and media workers have been killed since the onset of hostilities. On 12 April, the Committee to Protect Journalists (CPJ) reported that it had preliminarily documented the death of 95 journalists and media workers since 7 October, including 90 Palestinians, 2 Israelis and 3 Lebanese, stating that “journalists in Gaza face particularly high risks as they try to cover the conflict during the Israeli ground assault, including devastating Israeli airstrikes, disrupted communications, supply shortages, and extensive power outages.”

About 800,000 people may be forced to evacuate if the Israeli military launches a ground incursion into Rafah, warned Jamie McGoldrick on 12 April in a briefing at the end of his mission as Humanitarian Co-ordinator ad interim. IDPs would face a high risk of unexploded ordnance (UXOs) en route to other areas, including Khan Younis and Deir al Balah, and aid workers would face the additional challenge of scaling up assistance when there are barely enough supplies to meet humanitarian needs under the current conditions, according to McGoldrick. Overall, McGoldrick explained, humanitarians are working in an extremely challenging and hostile operating environment characterised by an unpredictable notification system, lack of communications equipment, no hotline to directly contact the Israeli military at times of emergency, a security vacuum, and a range of access constraints such as access denials and long hours of waiting at checkpoints. Assistance to northern Gaza has been far from adequate, and “at no point in time in the last month and more had we had three or even two of those roads [linking southern and northern Gaza] working at the same time simultaneously,” added McGoldrick. Noting that several commitments have already been made by Israeli authorities to facilitate the scaling up of assistance, he stated that no visible difference can yet be seen on the ground, warning that “if we don’t have the chance to expand the delivery of aid in all parts of Gaza, but in particular to the north, then we’re going to face a catastrophe.”

Satellite imagery shows a sharp increase in the number of damaged and destroyed buildings within the one-kilometre stretch of land along the border of the Gaza Strip west of the Armistice Demarcation Line, according to a new preliminary analysis by UNOSAT. Analysis undertaken on 29 February 2024 shows that 90 per cent of the 4,042 buildings within the zone have been destroyed or damaged: 3,033 were destroyed and 593 were damaged (severely or moderately), while 416 had no damage visible through satellite imagery. This is compared with a 15 per cent damage level on 15 October 2023. Another UNOSAT analysis shows that the percentage of damaged crop fields, arable land and fallow land in the zone has also increased from 5.36 per cent in October 2023 to 33.13 per cent in February 2024. According to UNOSAT, “the decline in the health and density of the crops can be observed due to the impact of activities such as razing, heavy vehicle activity, bombing, shelling, and other conflict-related dynamics.”

Efforts to scale up life-saving assistance and restore the health system continue to be undermined by “limited access, mission denials and delays, self-distribution of supplies among desperate crowds, and ongoing security challenges,” according to WHO. On 13 April, WHO and its partners were able to reach the Al Ahli Hospital in northern Gaza, delivering nearly 20,000 litres of fuel, some of which will be provided to Al Sahaba Hospital. Three critical patients and two companions were also evacuated to field hospitals in Rafah. WHO highlighted that Al Ahli was originally an 80-bed facility that now accommodates over 120 patients, with benches and pews serving as makeshift beds after the library and chapel were repurposed as inpatient departments. WHO warned that the overstretched facility can only provide minimal services, and many patients who are in critical condition, including children and people with severe trauma injuries and amputations, need to be immediately evacuated to receive treatment. The hospital is in dire need of additional beds, essential medicines and supplies, and urgently requires an international emergency medical team to support surgeries. On 15 April, the MoH in Gaza issued an appeal to all relevant institutions to help establish field hospitals in Gaza city and North Gaza governorate.

The dire situation across health facilities extends beyond northern Gaza, with a vacuum in healthcare provision created following the destruction of Nasser Medical Complex in southern Gaza, once the second-largest referral hospital in the Gaza Strip. Inspecting the non-functional facility, WHO reported that most of the equipment and machinery had been damaged, and the Limb Reconstruction Center, which WHO and its partners helped establish in 2019, is in ruins. The Nasser Medical Complex warehouse, which previously provided essential medicines and supplies to other hospitals south of Wadi Gaza, has also been destroyed. The UNFPA representative in the State of Palestine, Dominic Allen, explained that the maternal and neonatal clinic is now empty, “full of destruction and of the smell of death,” while during his previous visit in January it was full of pregnant women, midwives and doctors, as well as IDPs seeking refuge. Allen stressed the urgency of a ceasefire to be able to support the rehabilitation of life-saving maternal health services.

Calling for safe, sustained and scaled-up access to prevent famine, WFP and UNOPS delivered on 14 April fuel, enough for only four days, to enable a bakery in Gaza city to resume operations. Most of Gaza’s bakeries had been unable to operate due to conflict and lack of access, according to WFP. Since October 2023, the Gaza Strip has been under an electricity blackout after the Israeli authorities cut off the electricity supply and fuel reserves for Gaza’s only power plant were depleted. Kamel Ajour, a bakery owner in Gaza city, told WFP that “making bakeries work again will bring back life to the Gaza Strip.” WFP emphasized that, in recent months, northern Gaza “has largely been cut off from aid and has recorded the highest levels of catastrophic hunger in the world.” In mid-March 2024, the Global Nutrition Cluster reported a near doubling of malnutrition rates among children under the age of two in northern Gaza from 16 per cent in January to 31 per cent in February. According to the MoH in Gaza, 28 children have died of malnutrition and dehydration in northern Gaza since February, of whom 20 were under 12 months of age. Following the announcement of the bakery opening, crowds of people reportedly queued for the first time in months to buy bread, with 2.5 kilogrammes of bread reportedly priced at ILS5 (US$1.50), significantly lower than prices seen in previous months.

West Bank Update

On 15 April, Israeli forces, including Special Forces, raided Nablus city and besieged a house, killing a 17-year-old Palestinian boy, in an exchange of fire with Palestinians.

On 12 April, a 14-year-old boy from an Israeli settlement was reported missing near Al Mughayyir village in Ramallah and, the following day, was found dead near the Israeli settlement outpost of Malachei Hashalom. On 12 and 13 April, following the boy’s disappearance, large groups of Israeli settlers raided at least 17 Palestinian villages and communities in Ramallah, Nablus, and Jerusalem governorates, mainly those located along Roads 458 and 60, including Beitin, Deir Dibwan, Sinjil, Turmus’ayya, Duma, Qusra, As Sawiya, Mikhmas, Jaba’, Al Jalazoun Refugee Camp, Ein Siniya, Beit Furik, Rantis, Beitillu, Umm Safa, Al Mazra’a al Qibliyeh, and Ras Karkar. Two Palestinians, including a 17-year-old boy, were killed in Al Mughayyir and Beitin, and at least 45 were injured. Some 40 per cent (18) of those injured were shot with live ammunition. Moreover, at least 20 households were displaced when their homes were set on fire, and dozens of homes and vehicles were destroyed or sustained minor to moderate damage. Additionally, several agricultural structures sustained damage, with initial information indicating the killing of 50 heads of livestock and the theft of another 120 sheep in Al Mughayyir village. Israeli forces reportedly closed the entrances to the targeted villages, tightening movement restrictions along Road 60 between Ramallah and Nablus governorates, and were witnessed firing rubber bullets and tear gas canisters toward Palestinians. During the last two years, OCHA has documented at least 100 settler attacks on Palestinian farmers and herders in the area of Al Mughayyir village, resulting in the displacement of two nearby Bedouin communities comprising 220 people, with the most recent displacement incident occurring on 15 October 2023.

West Bank

[Palestinian Monitoring Group]

Israeli settler attack – 4 wounded – home invasion: Ramallah – 18:10, armed Israelis, from the Beit El Occupation settlement, attacked a home near the al-Jalazoun refugee camp, opening fire on residents and wounding four people: Ibrahim Nassif, Ahmed Hazem Hamed, Muhammad Hazem Hamed and Ghadeer Younis.

Israeli Army attack – 1 wounded: Qalqiliya – 17:05, Israeli Occupation forces shot and wounded a man, Ibrahim Jasser Abdullah Muhammad Abdullah, as he was about to cross the Green Line near al-Taybeh.

Home invasions: Nablus – 22:25, Israeli Occupation forces raided the village of Duma and searched two houses.

Home invasion: Nablus – 01:00–02:05, Israeli forces raided Burqa village and searched a home.

Israeli police and settlers’ mosque violation: Jerusalem – 08:00, settler militants, escorted by Israeli police, invaded the Al-Aqsa Mosque compound and molested worshippers.

Occupation settler land-grabbing: Jerusalem – 18:00, Israelis, from the Adam Occupation settlement, set up camps on land in Hizma, as well as in the village of Jaba.

Occupation settler violence: Ramallah – 09:35, Israeli Occupation settlers invaded pastoral land, in the village of al-Mugheir, beating up and injuring a man: Muhammad Zayed Abu Aliya.

Occupation settler violence: Ramallah – 12:15, Israeli settlers invaded the outskirts of Kafr Malik village and raided a workshop, beating up and injuring two workers.

Occupation settler population–control: Ramallah – 19:30, Israeli settlers prevented vehicles from passing through the Taybeh road junction.

Occupation settler stoning: Ramallah – 00:25, Israelis, from the Beit El Occupation settlement, stoned passing vehicles near the al-Mahkama checkpoint.

Occupation settler vandalism: Jenin – 18:15, Israelis, from the Shaked Occupation settlement, held up a vehicle and smashed its windows.

Occupation settler stoning: Jenin – 18:20, Israeli settlers stoned vehicles passing through the entrance to Silat al-Dahr.

Occupation settler beating: Tubas – 21:15, Israeli settlers closed the Maleh junction road in the Ein al-Hilweh area and beat up a woman: Fatima Alyan Zamel Daraghmeh.

Occupation settler stoning: Nablus – 22:50, Israeli settlers stoned a vehicle passing near the village of al-Lubban al-Sharqiya.

Occupation settler stoning – agricultural sabotage: Salfit – 22:10, Israeli Occupation settlers stoned a passing vehicle, in the Wadi al-Saber area of Qarawat Bani Hassan, vandalised a fence and uprooted 30 olive trees.

Occupation settler stoning: Salfit – 22:30, Israeli settlers stoned vehicles transiting through the Kafr al-Dik roundabout.

Occupation settler stoning – ambulance: Salfit – 23:00, Israeli settlers stoned an ambulance, near the Za’atara junction checkpoint.

Occupation settler stoning: Salfit – 23:15, Israeli settlers stoned a vehicle, near the Za’atara junction checkpoint.

Occupation settler land-grab: Jericho – 17:35, Israeli settlers established a new settlement outpost, in the spring area of al-Auja.

Occupation settlers beat up farmer: Bethlehem – 16:45, Israelis, from the Efrat Occupation settlement, beat up and hospitalised a farmer, Alaa Omar Abd Issa, working his land south of al-Khadr.

Occupation settler land-grab: Bethlehem – 19:30, Israeli settlers set up mobile homes on Taquo land.

Occupation settler agricultural sabotage: Hebron – 15:00, Israeli settlers invaded agricultural land, in the Kharouba area of al-Samou, and destroyed crops.

Occupation settler robbery: Hebron – 16:00, Israeli settlers seized a motor vehicle, in the Marqa Plain area of Yatta.

Occupation settler land-grab: Hebron – 20:20, Israeli settlers seized land in the Tuba area, east of Yatta, and set up camp.

Raid – 2 injured in village school: Ramallah – 09:50, Israeli Occupation forces raided al-Mazra’a al-Sharqiya, injuring two people in an invasion of the Mohammed bin Rashid Boys’ School, during which they removed the front entrance to the school.

Raid: Ramallah – 13:00, the Israeli Army raided and patrolled the village of Khirbet Abu Falah.

Raid: Ramallah – 13:00, Israeli troops raided and patrolled the village of Burqa.

Raid: Ramallah – 19:20, the Israeli military raided and patrolled the village of Umm Safa.

Raid: Ramallah – 19:25–22:35, Israeli soldiers raided and patrolled the village of al-Mugheir.

Raid – 2 taken prisoner: Ramallah – 02:00, Israeli Occupation forces raided the village of Kafr Ni’ma, taking prisoner two people.

Raid – 1 taken prisoner: Ramallah – 06:25, Israeli forces raided the town of al-Mazra’a al-Sharqiya, taking prisoner one person.

Raids: Jenin – 22:35–04:20, the Israeli Army raided and patrolled the villages of Arranah, Deir Ghazaleh, Arbouna and Faqua.

Raid – 1 taken prisoner: Jenin – 03:10–05:00, Israeli troops raided Ya’bad, taking prisoner one person.

Raid – theft and surveillance: Tubas – dawn, the Israeli Army raided the New Tubas Health Directorate in the city, storming and tampering with its contents as well as seizing a surveillance-camera.

Raid – 7 taken prisoner: Tulkarem – 23:30, Israeli troops raided Kafr al-Labad, taking prisoner seven people.

Raid – population-control: Tulkarem – 02:00, the Israeli military raided Anabta and ordered three people to report for interrogation at Israeli Military Intelligence.

Raid – 1 taken prisoner: Tulkarem – 03:10, Israeli soldiers raided Deir al-Balah, taking prisoner one person.

Raid – 2 taken prisoner: Tulkarem – 03:50, Israeli Occupation forces raided Shufa village, taking prisoner two people.

Raid – 1 taken prisoner: Qalqiliya – 22:05–02:25, Israeli forces raided Azzun, taking prisoner one person.

Raid: Qalqiliya – 00:45–04:30, the Israeli Army raided and patrolled the city.

Raid: Qalqiliya – 04:35, Israeli troops, firing stun grenades, raided and patrolled Hablat.

Raid: Qalqiliya – 04:55, the Israeli military, firing stun grenades, raided and patrolled the village of Ras Atiyah.

Raid – stun grenades fired: Qalqiliya – 04:55, Israeli soldiers, firing stun grenades, raided the village of Ras Atiya.

Raid: Nablus – 14:30, Israeli Occupation forces raided and patrolled the village of Duma.

Raid: Nablus – 15:55–17:15, Israeli forces raided and patrolled the village of Asira al-Qibliya.

Raid: Nablus – 15:55, the Israeli Army raided and patrolled Qusra.

Raid: Nablus – 19:00, Israeli troops raided and patrolled the city.

Raid – rubber-coated bullets and stun grenades fired: Nablus – 19:40, the Israeli military, firing rubber-coated bullets and stun grenades, raided and patrolled the village of Yatma.

Raid – villager beaten up: Nablus – 22:00–01:05, Israeli soldiers raided the village of Tal and beat up a resident: Muhammad Nasrallah Asida.

Raid – agricultural sabotage: Salfit – 18:50, Israeli Occupation forces raided Kafr al-Dik and destroyed an agricultural building.

Raid – stun grenades fired: Bethlehem – 21:00, Israeli forces fired stun grenades at people, near the Aida refugee camp.

Raid – surveillance: Hebron – 16:15, the Israeli Army raided the village of Hijrah, invaded the Southern Electricity Company and seized its surveillance-camera recordings.

Raid: Hebron – 23:10–01:30, Israeli troops raided and patrolled Beit Ummar.

Raid – rubber-coated bullets and stun grenades fired in refugee camp: Hebron – 23:30–00:30, the Israeli military, firing rubber-coated bullets and stun grenades, raided the al-Arroub refugee camp.
