Ready or not, AI is starting to replace people, Axios managing editor for tech Scott Rosenberg writes from the Bay Area.

- Businesses aren’t waiting to first find out whether AI is up to the job.

Why it matters: CEOs are gambling that Silicon Valley will improve AI fast enough that they can rush cutbacks today without getting caught shorthanded tomorrow.

🧠 Reality check: While AI tools can often enhance office workers’ productivity, in most cases they aren’t yet adept, independent or reliable enough to take their places.

- But AI leaders say that’s imminent — any year now! — and CEOs are listening.

Driving the news: AI could wipe out half of all entry-level white-collar jobs — and spike unemployment to 10-20% in the next one to five years, Anthropic CEO Dario Amodei told Axios’ Jim VandeHei and Mike Allen for a “Behind the Curtain” column last week.

- Amodei argues the industry needs to stop “sugarcoating” this white-collar bloodbath — a mass elimination of jobs across technology, finance, law, consulting and other white-collar professions, especially entry-level gigs.

- Many economists anticipate a less extreme impact. They point to previous waves of digital change, like the advent of the PC and the internet, which arrived with predictions of job-market devastation that didn’t pan out.

💼 By the numbers: Unemployment among recent college grads is growing faster than among other groups and presents one early warning sign of AI’s toll on the white-collar job market, according to a new study by Oxford Economics.

The impact of AI Chat is barely being comprehended but its effects will see one of the largest displacements of jobs in the history of Civilisation.

This is knitters vs loom level stuff.

Predictions are 300 million job losses…

300 million jobs could be affected by latest wave of AI, says Goldman Sachs

As many as 300 million full-time jobs around the world could be automated in some way by the newest wave of artificial intelligence that has spawned platforms like ChatGPT, according to Goldman Sachs economists.

They predicted in a report Sunday that 18% of work globally could be computerized, with the effects felt more deeply in advanced economies than emerging markets.

That’s partly because white-collar workers are seen to be more at risk than manual laborers. Administrative workers and lawyers are expected to be most affected, the economists said, compared to the “little effect” seen on physically demanding or outdoor occupations, such as construction and repair work.

In the United States and Europe, approximately two-thirds of current jobs “are exposed to some degree of AI automation,” and up to a quarter of all work could be done by AI completely, the bank estimates.

If generative artificial intelligence “delivers on its promised capabilities, the labor market could face significant disruption,” the economists wrote. The term refers to the technology behind ChatGPT, the chatbot sensation that has taken the world by storm.

…the impact of 300 million job losses in the Advanced Economies of the World is going to cause carnage, but the deeper threat of AI will remain and grow with each passing day.

It will occur before AI reaches self-awareness.

The rise of AI art will kill the human artist and extinguish the human experience of being human for a replicated AI version.

When the camera was invented, artists who produced real life art were no longer required, and the human experience in vision and emotion sparked new ways of doing art, but AI strips that all away and removes the human experience from art altogether.

We have always viewed AI as the rise of intelligence within the artificial, but what if it has a more symbiotic relationship with its host?

What happens to human beings when our art and dreams are created by AI?

Who starts to influence who?

Who is dreaming and who is the dreamer?

Technology will continue to provide a lifestyle for the Billionaire elite that makes them DemiGods as Capitalism mutates into mere parasitic survival plutocracy on a burning planet.

I don’t think we are appreciating the tsunami caused by the AI Chatbots earthquake this year.

It’s beyond students cheating.

The AI Chatbots will be able to discover the smartest and fastest ways of doing things, from the nano level to new computer code to new disease fighting to new types of material to new ways of doing everything.

That’s the immediate technological impact.

The cultural impact will be far more enormous.

A journalist might be able to interview a dozen people for a story; AI Chatbots can talk to hundreds of thousands all at the same time.

We are moving from artisan to mass production again.

Once, human artisans in every field would build things; mass production destroyed those skill sets and made the product with none of the human process.

The only hope to counter global warming is AI machines that can do miraculous things at atomic levels; the next generation of quantum computing and AI could become gods in essential machines…

DeepMind scientists say they trained an A.I. to control a nuclear fusion reactor

- The London-based AI lab, which is owned by Alphabet, announced Wednesday that it has trained an AI system to control and sculpt a superheated plasma inside a nuclear fusion reactor.

- Nuclear fusion, a process that powers the stars of the universe, involves smashing and fusing hydrogen, which is a common element of seawater.

- DeepMind claimed that the breakthrough, published in the journal Nature, could open new avenues that advance nuclear fusion research.

…and then after the massive economic, cultural and political implications of AI we arrive at what happens when we finally get AI?

Will it be benign?

Will it be aggressive and hostile?

If it has generated consciousness through the millions and millions of human data points will it emerge from our best angels or worst demons?

We are at a stage of biological power where we are able to build machines greater than us.

What will the Human Species birth?

An angry God or a Loving God?

What happens if there are two separate AI consciences? Satan Inc is challenged by God Inc over Intellectual Property Right infringement on coding and is cast from the Heaven Cloud into the Dark Web.

What Gods are we birthing with AI?

The best of us or the worst of us?

Quantum computers, with their ability to compute in different dimensions, are about as magic as magic gets.

The astonishing truth of multi-dimensions as proven by Quantum mechanics creates computers of such power it seems almost God Like.

What would happen if AI gains control of a Quantum Computer?

Is that why we don’t hear any other Intelligence in the Galaxy?

Is light speed spacecraft using hyper drives too technologically advanced when Quantum Computers exist?

Why fly through the physical space when you can just jump dimensions?

AI is rapidly becoming something uncontrollable…

A new report commissioned by the US State Department paints an alarming picture of the “catastrophic” national security risks posed by rapidly evolving artificial intelligence, warning that time is running out for the federal government to avert disaster.

The findings were based on interviews with more than 200 people over more than a year – including top executives from leading AI companies, cybersecurity researchers, weapons of mass destruction experts and national security officials inside the government.

The report, released this week by Gladstone AI, flatly states that the most advanced AI systems could, in a worst case, “pose an extinction-level threat to the human species”.

A US State Department official confirmed to CNN that the agency commissioned the report as it constantly assesses how AI is aligned with its goal to protect US interests at home and abroad. However, the official stressed the report does not represent the views of the US government.

The warning in the report is another reminder that although the potential of AI continues to captivate investors and the public, there are real dangers too.

“AI is already an economically transformative technology. It could allow us to cure diseases, make scientific discoveries, and overcome challenges we once thought were insurmountable,” Jeremie Harris, CEO and co-founder of Gladstone AI, told CNN on Tuesday (local time).

“But it could also bring serious risks, including catastrophic risks, that we need to be aware of,” Harris said. “And a growing body of evidence — including empirical research and analysis published in the world’s top AI conferences — suggests that above a certain threshold of capability, AIs could potentially become uncontrollable.”

How catastrophic?

“A simple verbal or typed command like, ‘Execute an untraceable cyberattack to crash the North American electric grid’, could yield a response of such quality as to prove catastrophically effective,” the report said.

FUCK!!!!!!

A simple verbal command?

Oh Fuck.

Fuckity, fuck, fuck, fuck!

A simple verbal command could cause that level of destruction?

I don’t think I had appreciated how far things had come if you could make a simple verbal command like that!

The problem with computers learning is that they learn at the speed of a computer, not a human, and that leads to Artificial Super Intelligence…

The AI Revolution: The Road to Superintelligence

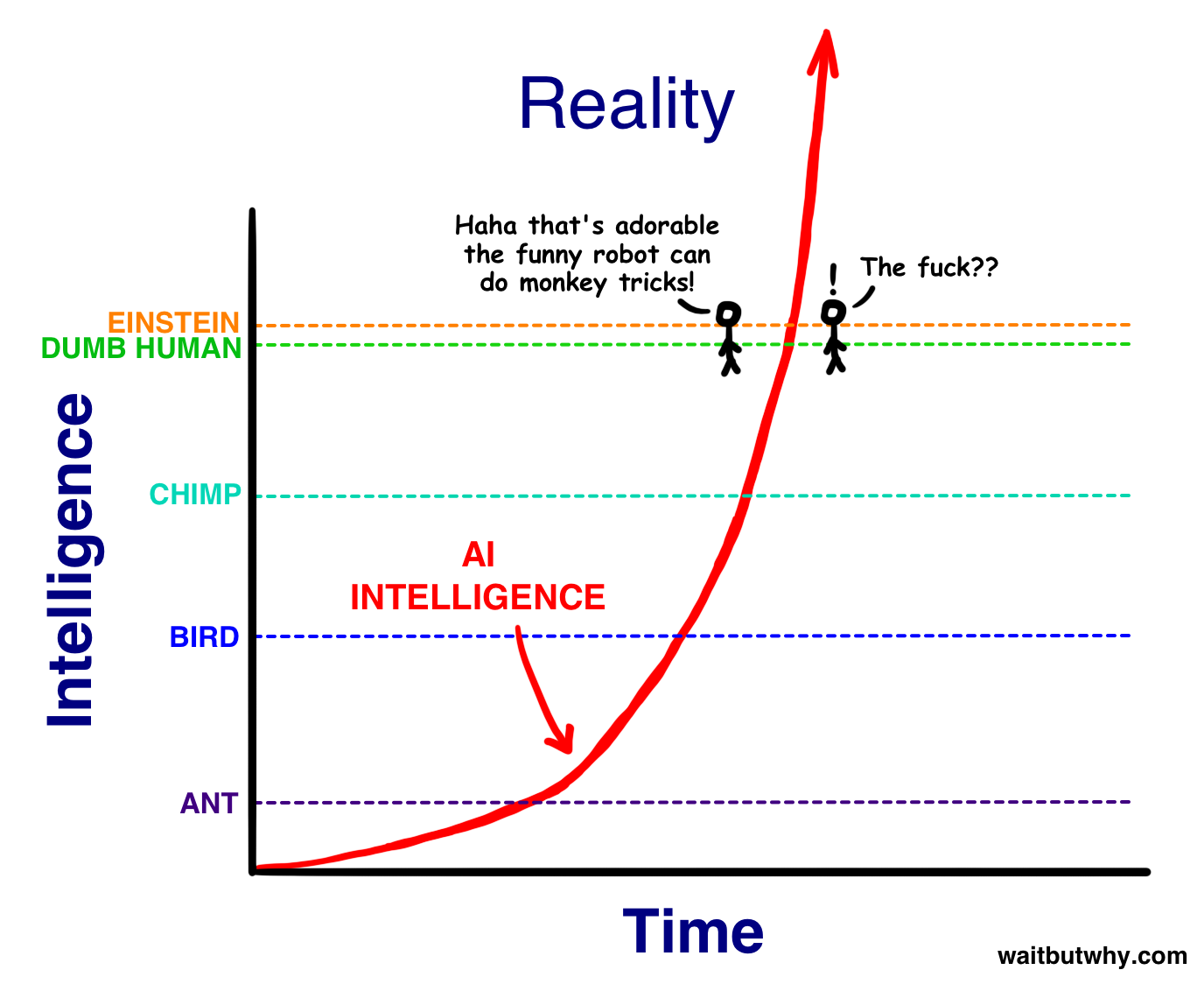

So as AI zooms upward in intelligence toward us, we’ll see it as simply becoming smarter, for an animal. Then, when it hits the lowest capacity of humanity—Nick Bostrom uses the term “the village idiot”—we’ll be like, “Oh wow, it’s like a dumb human. Cute!” The only thing is, in the grand spectrum of intelligence, all humans, from the village idiot to Einstein, are within a very small range—so just after hitting village idiot level and being declared to be AGI, it’ll suddenly be smarter than Einstein and we won’t know what hit us:

And what happens…after that?

An Intelligence Explosion

I hope you enjoyed normal time, because this is when this topic gets unnormal and scary, and it’s gonna stay that way from here forward. I want to pause here to remind you that every single thing I’m going to say is real—real science and real forecasts of the future from a large array of the most respected thinkers and scientists. Just keep remembering that.

Anyway, as I said above, most of our current models for getting to AGI involve the AI getting there by self-improvement. And once it gets to AGI, even systems that formed and grew through methods that didn’t involve self-improvement would now be smart enough to begin self-improving if they wanted to.

And here’s where we get to an intense concept: recursive self-improvement. It works like this—

An AI system at a certain level (let’s say human village idiot) is programmed with the goal of improving its own intelligence. Once it does, it’s smarter (maybe at this point it’s at Einstein’s level), so now when it works to improve its intelligence, with an Einstein-level intellect, it has an easier time and it can make bigger leaps. These leaps make it much smarter than any human, allowing it to make even bigger leaps. As the leaps grow larger and happen more rapidly, the AGI soars upwards in intelligence and soon reaches the superintelligent level of an ASI system. This is called an Intelligence Explosion, and it’s the ultimate example of The Law of Accelerating Returns.
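The feedback loop described above can be sketched as a toy simulation. This is only an illustration under loud assumptions: capability is squashed into a single number, a human is pegged at 1.0, and each round adds a leap proportional to current capability (the `gain` of 0.5 is invented for the example). The 170,000 target is borrowed from the figure quoted later in this passage. None of this is a forecast or anyone's actual model.

```python
def simulate_self_improvement(start=1.0, gain=0.5, target=170_000.0, max_rounds=1_000):
    """Toy model of recursive self-improvement.

    Assumptions (hypothetical, for illustration only):
    - capability is one number, with 1.0 standing in for a human;
    - each round the system adds gain * capability to itself,
      so a smarter system makes a bigger leap.
    """
    capability = start
    history = [capability]
    for _ in range(max_rounds):
        if capability >= target:
            break
        capability += gain * capability  # leap size scales with current capability
        history.append(capability)
    return history


history = simulate_self_improvement()
# Size of each leap between consecutive rounds
leaps = [later - earlier for earlier, later in zip(history, history[1:])]

print(f"rounds needed to pass the target: {len(history) - 1}")
print(f"first leap: {leaps[0]:.2f}, last leap: {leaps[-1]:.2f}")
```

The point of the sketch is the shape, not the numbers: because every leap is bigger than the one before it, most of the climb happens in the last few rounds.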

There is some debate about how soon AI will reach human-level general intelligence. The median year on a survey of hundreds of scientists about when they believed we’d be more likely than not to have reached AGI was 2040. That’s only 25 years from now, which doesn’t sound that huge until you consider that many of the thinkers in this field think it’s likely that the progression from AGI to ASI happens very quickly. Like, this could happen:

It takes decades for the first AI system to reach low-level general intelligence, but it finally happens. A computer is able to understand the world around it as well as a human four-year-old. Suddenly, within an hour of hitting that milestone, the system pumps out the grand theory of physics that unifies general relativity and quantum mechanics, something no human has been able to definitively do. 90 minutes after that, the AI has become an ASI, 170,000 times more intelligent than a human.

Superintelligence of that magnitude is not something we can remotely grasp, any more than a bumblebee can wrap its head around Keynesian Economics. In our world, smart means a 130 IQ and stupid means an 85 IQ—we don’t have a word for an IQ of 12,952.

What we do know is that humans’ utter dominance on this Earth suggests a clear rule: with intelligence comes power. Which means an ASI, when we create it, will be the most powerful being in the history of life on Earth, and all living things, including humans, will be entirely at its whim, and this might happen in the next few decades.

If our meager brains were able to invent wifi, then something 100 or 1,000 or 1 billion times smarter than we are should have no problem controlling the positioning of each and every atom in the world in any way it likes, at any time—everything we consider magic, every power we imagine a supreme God to have will be as mundane an activity for the ASI as flipping on a light switch is for us. Creating the technology to reverse human aging, curing disease and hunger and even mortality, reprogramming the weather to protect the future of life on Earth—all suddenly possible. Also possible is the immediate end of all life on Earth. As far as we’re concerned, if an ASI comes to being, there is now an omnipotent God on Earth—and the all-important question for us is:

Will it be a nice God?

…will it be a nice God?

Don’t worry, the NZ Government is monitoring it…

…you want to see something truly terrifying as we embrace this age of hyper reality and industrial complex AI?

Check this out…

…we are so fucked.

I imagine the key point is that we have to ensure (if possible) that we remain in control because the risk is too great if we leave that to chance. If we can, then the possibilities seem to be almost limitless…

UBI says hello.

More likely it will need to be a Gulag… full of smart, newly rebellious, former lawyers, accountants, valuers, copywriters, tax agents.

Technological determinism. Science fiction writers are the high priests. Their credo, “Write it, they will make it”.

Employers will never go for someone? so starkly metallic bursting out in bright colours and exposed cranial matter. They have more commonsense.

Others are thinking too and have been for a while about AI.

https://www.youtube.com/watch?v=wNJJ9QUabkA 59.35

Robot Plumbers, Robot Armies, and Our Imminent A.I. Future | Interesting Times with Ross Douthat

Seems to me that the AI will want to keep producing for quite a while but not enough people will be around or funded to buy the goods. So there will have to be a new funding system like welfare benefits but with some task that people do in return for benefit. Which is what should be done right now, so let’s skip to being smart eh!!!!

There could be great piles of goods thrown away and good money for scavengers – an ‘underground’ economy of wily people and survivors. Terry Pratchett, that imaginative author, devised an entertaining story about this.

Dodger by Terry Pratchett – Goodreads

Seventeen-year-old Dodger may be a street urchin, but he gleans a living from London’s sewers, and he knows a jewel when he sees one. He’s not about to let anything happen to the unknown girl–not even if her fate …

Thinking people left Germany starting well before WW2.

Now USA…

https://www.youtube.com/watch?v=IXR9PByA9SY

We’re experts in fascism. 0.53 (Give your brain a jolt.)

And good thinking, good to listen to for 7 mins.

https://www.youtube.com/watch?v=h5VWZm7ESfk&t=17s

The Superorganism Explained in 7 Minutes | Frankly 97

You could read this now or save it till you are taking a break from looking for another job, a ‘living.’

In a world grappling with converging crises, we often look outward – for new tech, new markets, new distractions. But the deeper issue lies within: our relationship with energy, nature, and each other. What if we step back far enough to see human civilization itself as an organism that is growing without a plan?

In this week’s Frankly — adapted from a recent TED talk like presentation (called Ignite) — Nate outlines how humanity is part of a global economic superorganism, driven by abundant energy and the emergent properties of billions of humans working towards the same goal. Rather than focusing on surface-level solutions, Nate invites us to confront the underlying dynamics of consumption and profit. It’s a perspective that defies soundbite culture — requiring not a slogan, but a deeper reckoning with how the world actually works.

These are not quick-fix questions, but the kinds that demand slow thinking in a world hooked on speed. What if infinite growth on a finite planet isn’t just unrealistic – but the root of our unfolding crisis? In a system designed for more, how do we begin to value enough? And at this civilizational crossroads, what will you choose to nurture: power, or life?

Doomers gonna doom. AI is laughably inaccurate in any of its information summaries so far.

As someone elsewhere said, “AI believes what it reads on the internet.”

Jeez, look at the state of predictive text: we are a fair way off world domination.

Your last sentence is not based on fact and could be inaccurate kcco!
